RELATED LINK:

Previous experiment on a spatial live set: GAP+ Architecture of Sound

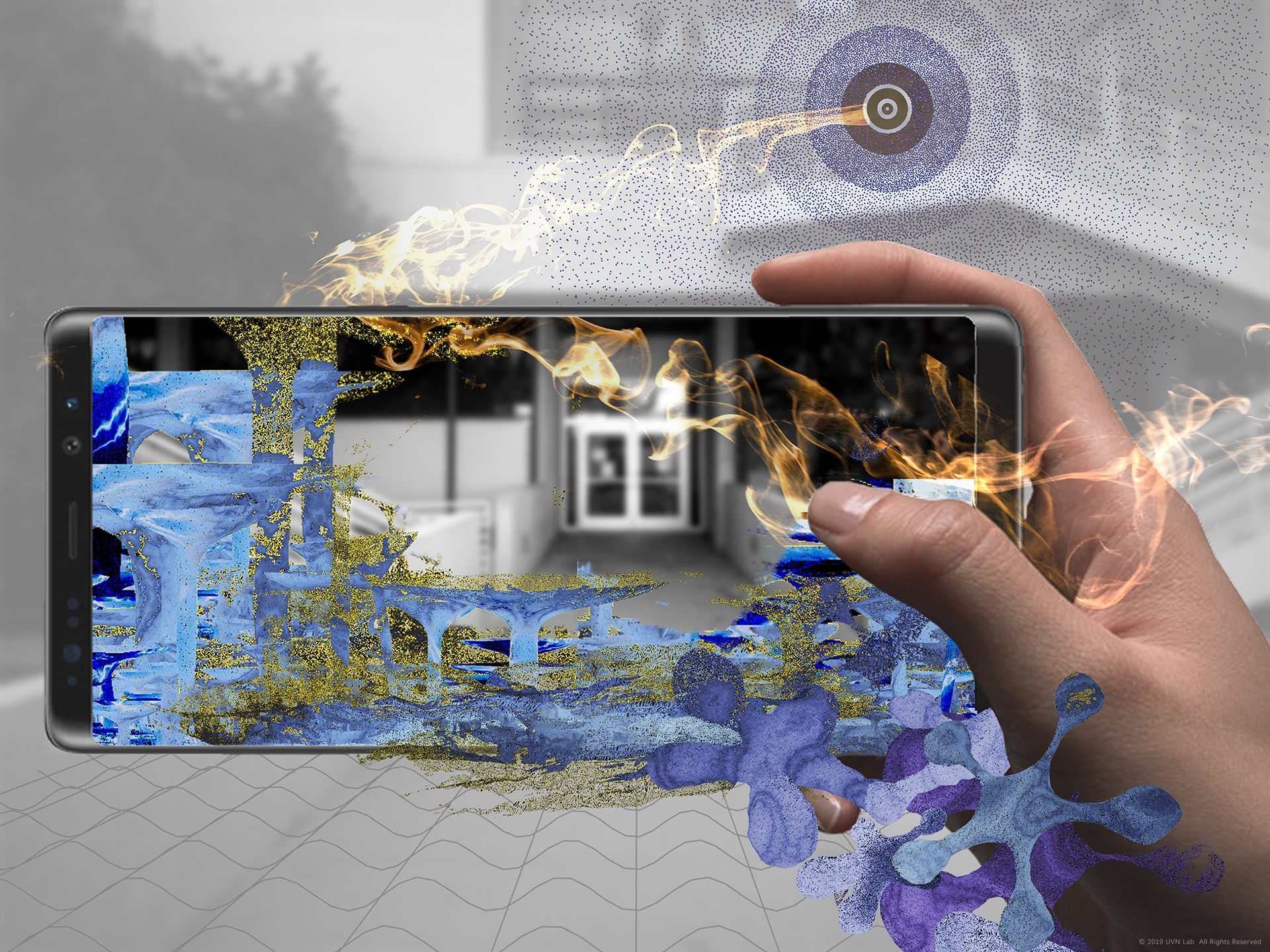

■Acoustic Garden applies spatialized sound synthesis to the physical world with handheld AR.

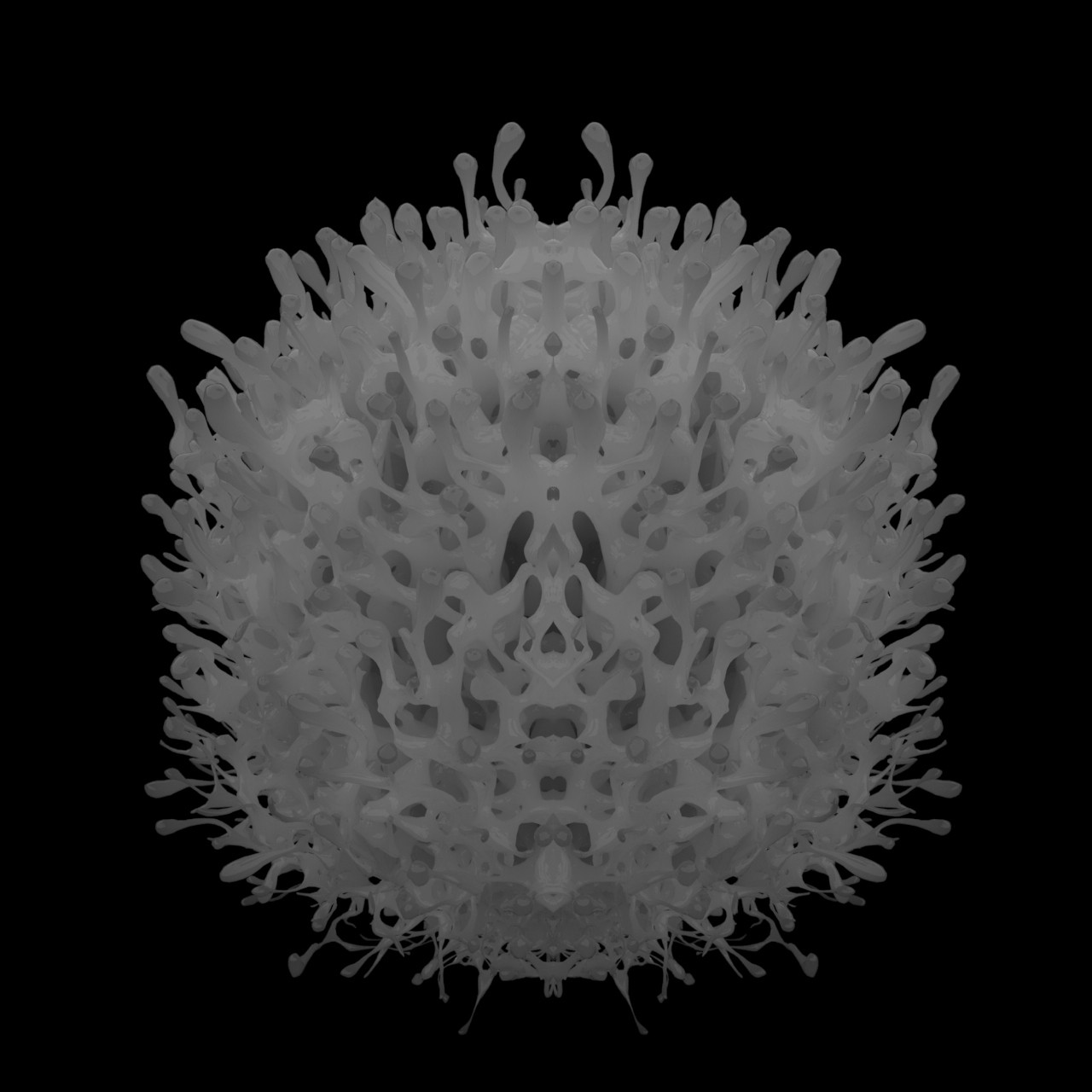

It’s an invisible virtual world,

Listen, and discover

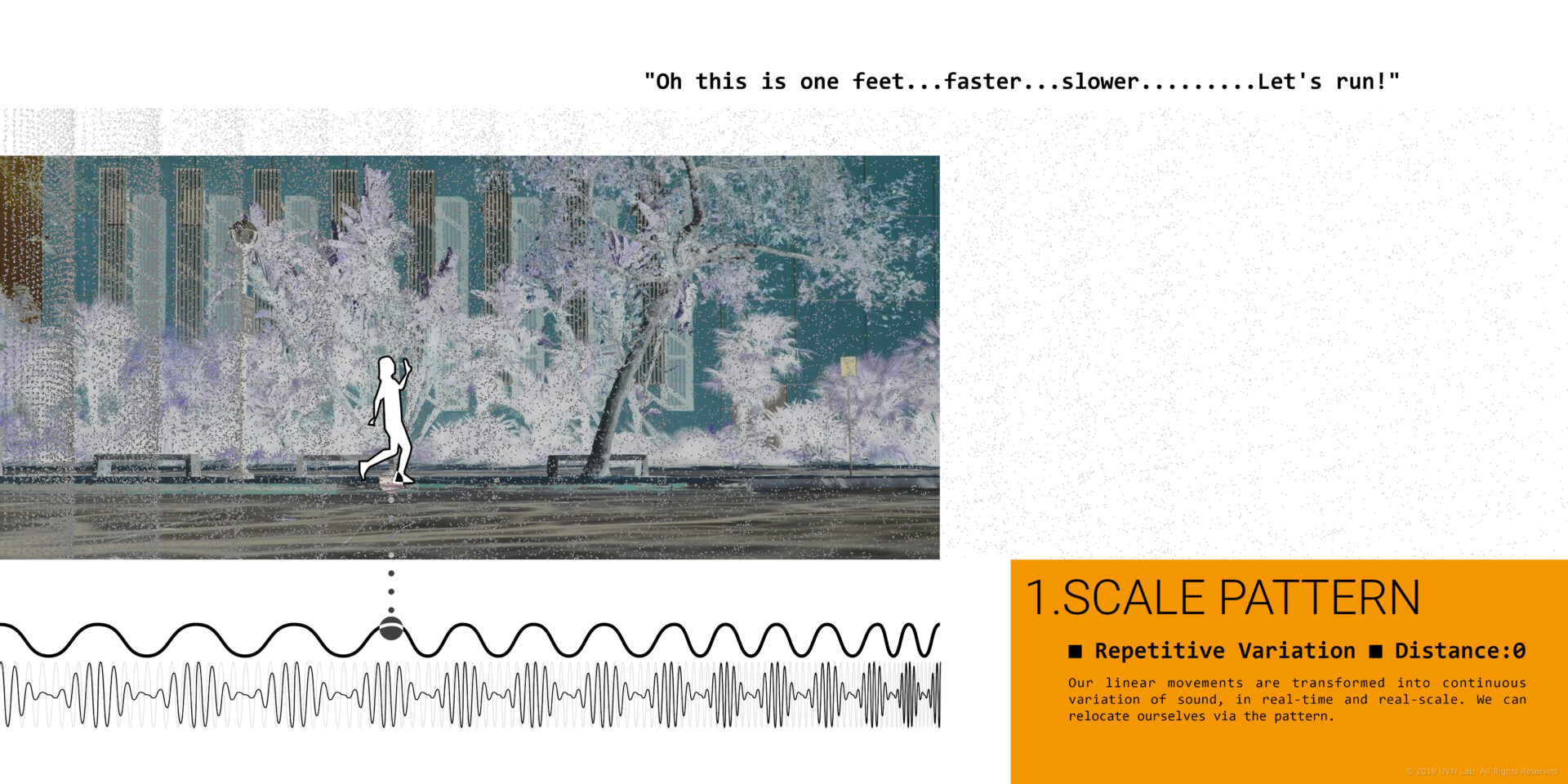

how our movement reshapes the acoustic space

Find the sound

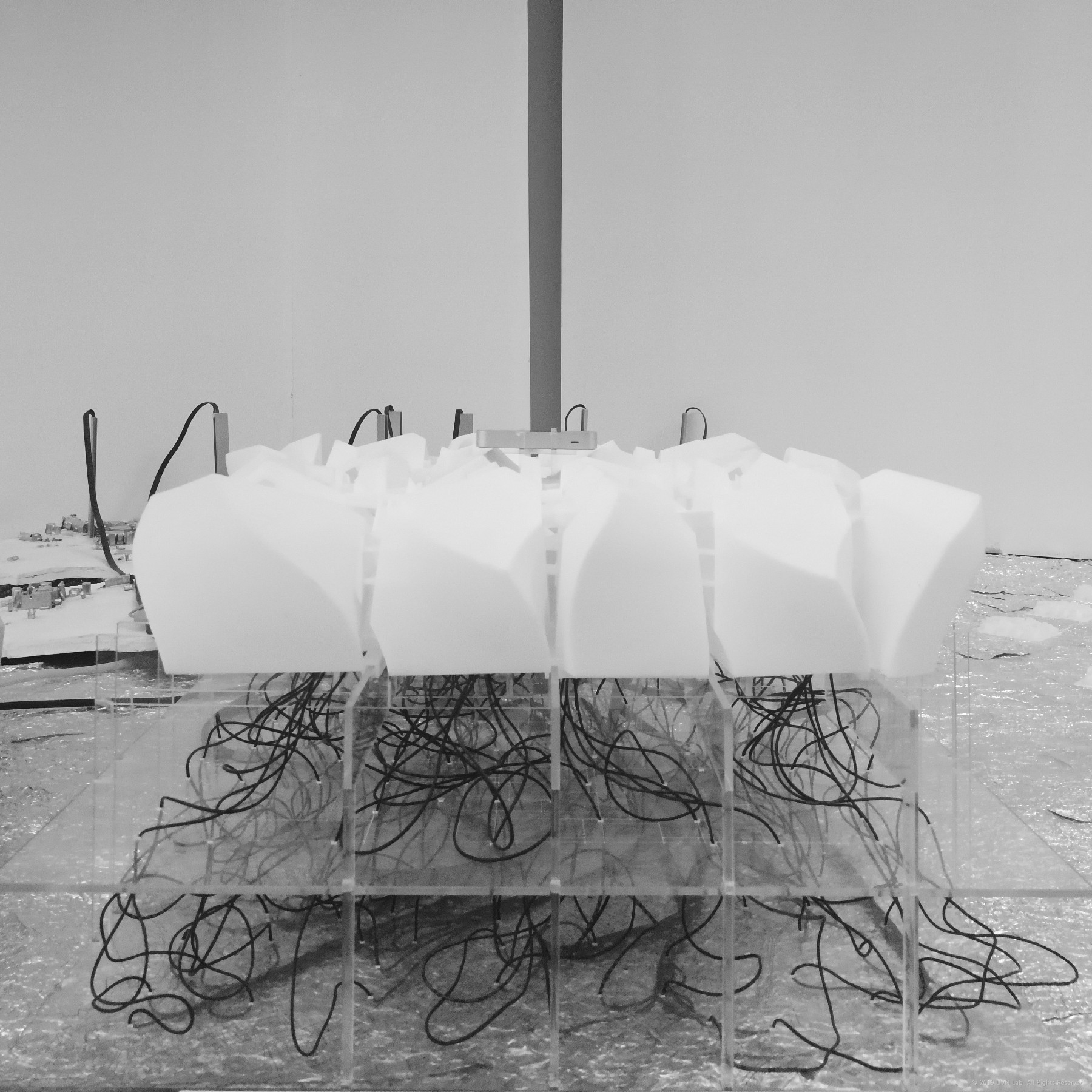

and watch a virtual garden “bloom” above reality

■DOWNLOAD

Playable prototype powered by Unreal Engine, ARCore & ARKit.

*Android devices must support ARCore.

■AUGMENTED ACOUSTIC SPACE

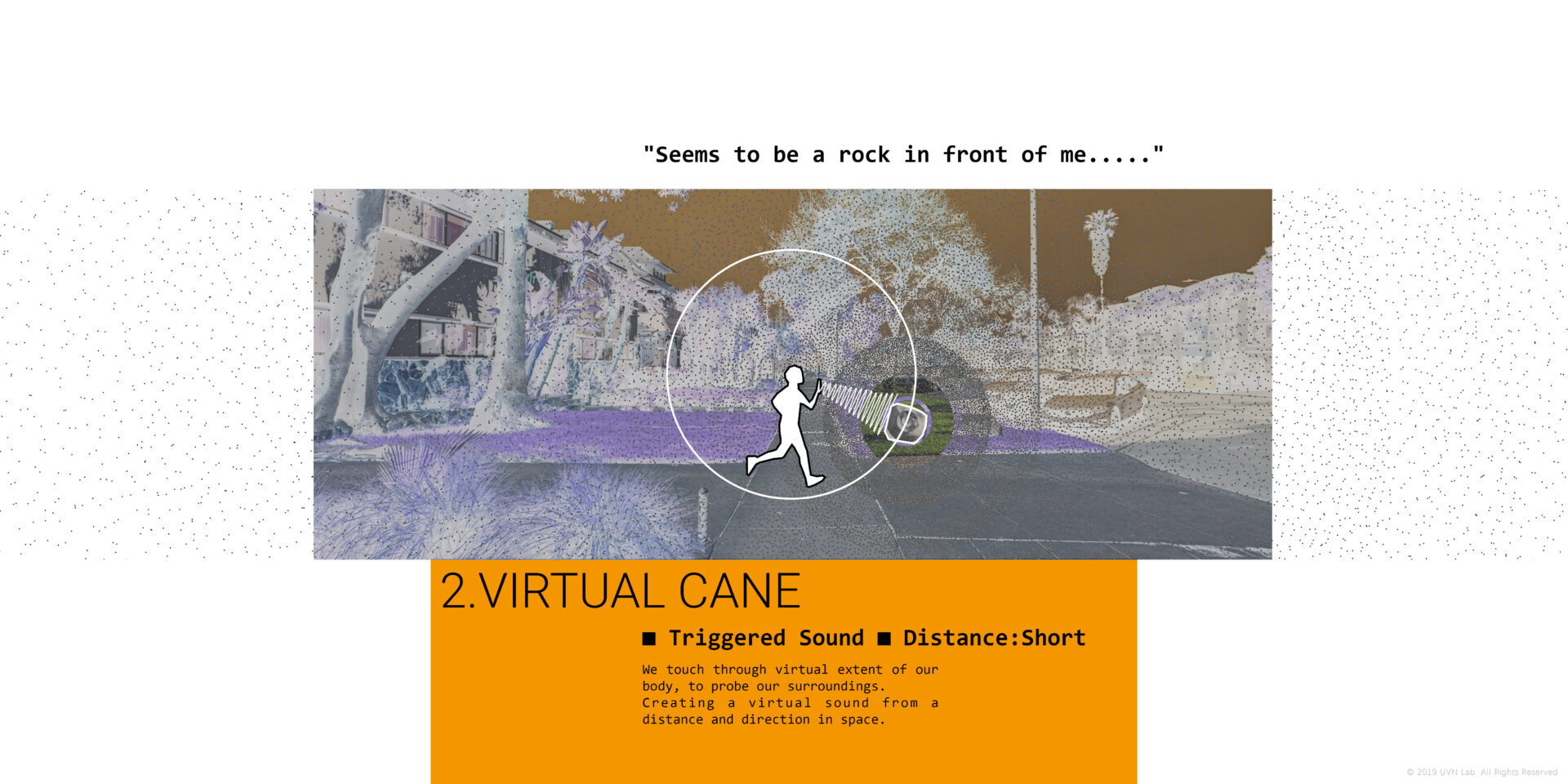

What does spatialized narrative mean for an acoustic world in the era of augmented reality?

Acoustic-driven narrative, exploring beyond visual cues.

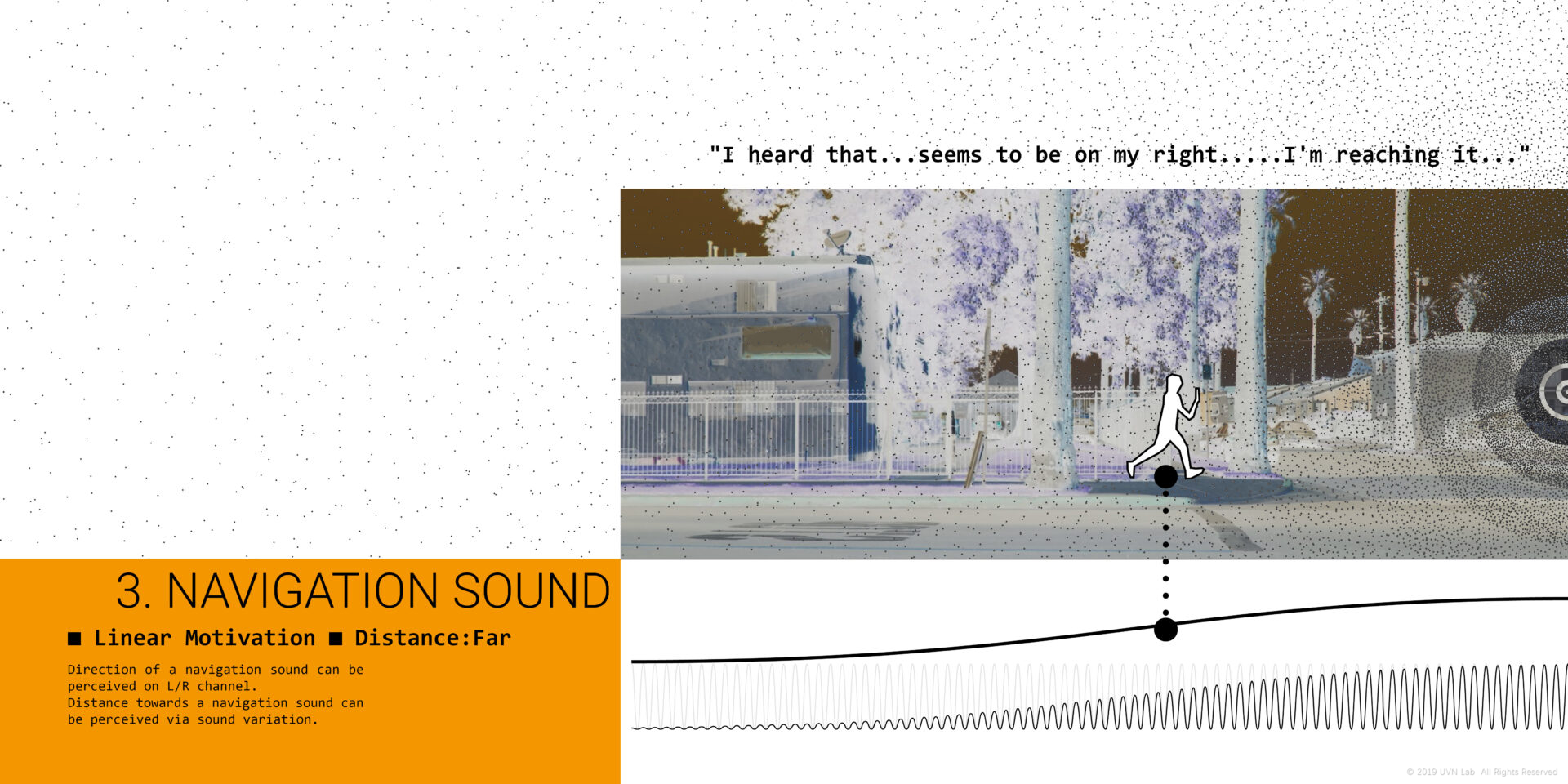

Spatial sound as navigation, driving the whole narrative forward.

Spatial control over parallel modulation of multiple tracks, with a range of modulation curves and FX distortions.
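The page does not describe how spatial modulation is wired up inside the project. As a minimal sketch, assuming each track has one parameter driven by the listener's distance to a sound source through its own curve, multi-track parallel modulation could look like the code below. The track names, parameter names, ranges, radii, and curve shapes are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical modulation curves: each maps a normalized proximity t in [0, 1]
# (0 = at the edge of the zone, 1 = at the source) to a shaping factor in [0, 1].
CURVES: Dict[str, Callable[[float], float]] = {
    "linear": lambda t: t,
    "quadratic": lambda t: t * t,
    "smoothstep": lambda t: t * t * (3.0 - 2.0 * t),
}


@dataclass
class TrackMod:
    name: str      # hypothetical track label, e.g. "pad"
    param: str     # hypothetical parameter name, e.g. "cutoff_hz" or "drive"
    low: float     # parameter value at (or beyond) the edge of the zone
    high: float    # parameter value at the source
    curve: str     # key into CURVES
    radius: float  # zone radius in meters


def proximity(distance: float, radius: float) -> float:
    """Normalized proximity: 1 at the source, falling linearly to 0 at the radius."""
    return max(0.0, min(1.0, 1.0 - distance / radius))


def modulate(tracks: List[TrackMod], distance: float) -> Dict[str, float]:
    """Evaluate every track's modulation for one listener distance."""
    out: Dict[str, float] = {}
    for tr in tracks:
        shaped = CURVES[tr.curve](proximity(distance, tr.radius))
        out[f"{tr.name}.{tr.param}"] = tr.low + (tr.high - tr.low) * shaped
    return out


tracks = [
    TrackMod("pad", "cutoff_hz", 200.0, 8000.0, "quadratic", 10.0),
    TrackMod("lead", "drive", 0.0, 1.0, "smoothstep", 5.0),
]
print(modulate(tracks, 5.0))  # {'pad.cutoff_hz': 2150.0, 'lead.drive': 0.0}
```

Giving each track its own radius and curve means a single walk through the space opens the filter on one layer gradually while another layer's distortion only kicks in close to the source, which is one way to get several parallel modulations from a single spatial input.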

Users can perform multiple layers of acoustic narrative in real time and at real scale. By following the sound, they can navigate freely, even without any visual cues. Built on Unreal Engine, the project integrates Spatialized Mastering, Spatialized Modulation, and physics-based audio.
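Navigating "by following sound" relies on two cues: loudness rising as you approach a source, and left/right balance showing which way it lies. Unreal Engine has its own attenuation and spatialization settings. The sketch below is not that implementation but a generic model, assuming inverse-distance attenuation and equal-power stereo panning on a 2D floor plan (x right, y up). The function names and the `ref_distance` parameter are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def inverse_distance_gain(distance: float, ref_distance: float = 1.0) -> float:
    """Inverse-distance attenuation: full gain inside ref_distance, ref/d beyond it."""
    if distance <= ref_distance:
        return 1.0
    return ref_distance / distance


def equal_power_pan(azimuth: float) -> Tuple[float, float]:
    """Equal-power stereo pan. azimuth is in radians: 0 = straight ahead,
    +pi/2 = hard right, -pi/2 = hard left. Only the lateral component is used,
    so a source behind the listener pans like its mirror image in front."""
    lateral = math.sin(azimuth)               # -1 (left) .. +1 (right)
    theta = (lateral + 1.0) * math.pi / 4.0   # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


def stereo_gains(listener: Vec2, yaw: float, source: Vec2,
                 ref_distance: float = 1.0) -> Tuple[float, float]:
    """Left/right gains for one source. yaw is the listener's heading in
    radians, measured counter-clockwise from the +x axis."""
    dx, dy = source[0] - listener[0], source[1] - listener[1]
    distance = math.hypot(dx, dy)
    # atan2 gives a counter-clockwise angle (positive = to the left);
    # negate it so that positive azimuth means "to the listener's right".
    azimuth = -(math.atan2(dy, dx) - yaw)
    gain = inverse_distance_gain(distance, ref_distance)
    left, right = equal_power_pan(azimuth)
    return gain * left, gain * right


# A listener at the origin facing +x hears a source 2 m to the right
# almost entirely in the right channel, at half gain.
print(stereo_gains((0.0, 0.0), 0.0, (0.0, -2.0)))
```

Turning until both channels are equally loud points the listener at the source, and walking toward it raises the overall gain. Together these let someone find a sound without any visual marker.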