Intro

This is a story about deviations, errors, and recursions.

In late 2018, while preparing my portfolio for a master's degree, I kept coming back to one question. We usually consider a problem under ideal conditions and only introduce imperfections later, as we move into the physical world. Errors are always defined as problems to be solved, whether they are the toy blocks we spent a day assembling in childhood, the inevitable noise in photos taken in dark environments, or the defects produced by seemingly accurate manufacturing tools. These were the things I thought about every day, and they even became a topic between me and my instructor: since design is developed under constraints, can we turn errors into something interesting? In the end, I put this image on the last page of my portfolio to summarize these scattered ideas and to announce a possible branch of my future research. Well, I have to admit, it was just too confusing.

I, capture myself

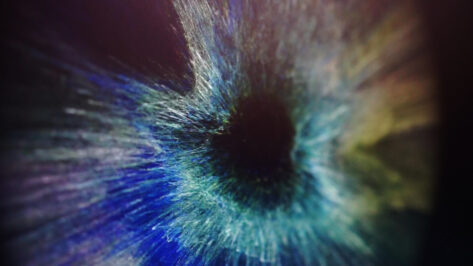

This story began with a classic way of playing around with cameras: recording what a camera has recorded with the same camera. For example, we can get a series of visual effects by pointing a connected webcam at its own screen, by recording projected content with a camera connected to the projector, or by using the front camera of a phone to record a mirror. The farther the camera is from the screen, the longer the “space” we see between them. If we move them closer, any interference in reality is magnified again and again until it becomes high-contrast flashes of extreme dark and extreme bright. Even a small error accumulates quickly and produces a highly distorted image. If we put external objects between them, a series of flashing shadows is recorded and fades away frame by frame. Results vary depending on the display device.
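The way a tiny disturbance either explodes or dies out in such a loop can be sketched in a few lines. This is only a toy model, not the actual setup: the frame is a short list of gray values, and the whole camera-and-screen chain is reduced to a single assumed `gain` factor (greater than 1 when the camera is close, less than 1 when it is far). The function names and all numbers are hypothetical.

```python
def feedback_pass(frame, gain):
    """One camera-records-screen pass (toy model).

    Each pixel's deviation from mid-gray (0.5) is scaled by `gain`,
    then clipped to the displayable range [0, 1].
    """
    return [min(1.0, max(0.0, 0.5 + gain * (p - 0.5))) for p in frame]


def run_feedback(frame, gain, passes):
    """Feed the output of each pass back in as the next input."""
    for _ in range(passes):
        frame = feedback_pass(frame, gain)
    return frame


# A nearly uniform gray frame with two tiny disturbances (assumed values).
start = [0.5, 0.5, 0.501, 0.5, 0.499]

close = run_feedback(start, gain=1.5, passes=30)  # assumed: camera close, gain > 1
far = run_feedback(start, gain=0.8, passes=30)    # assumed: camera far, gain < 1
```

With these assumed values, the two disturbed pixels in `close` end up fully white and fully black, while in `far` they settle back to gray. A real loop also blurs, shifts, and delays the image, which this sketch leaves out.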

The slight difference that accumulates over time

Since there is too much interference in reality, what about a completely virtual projection?

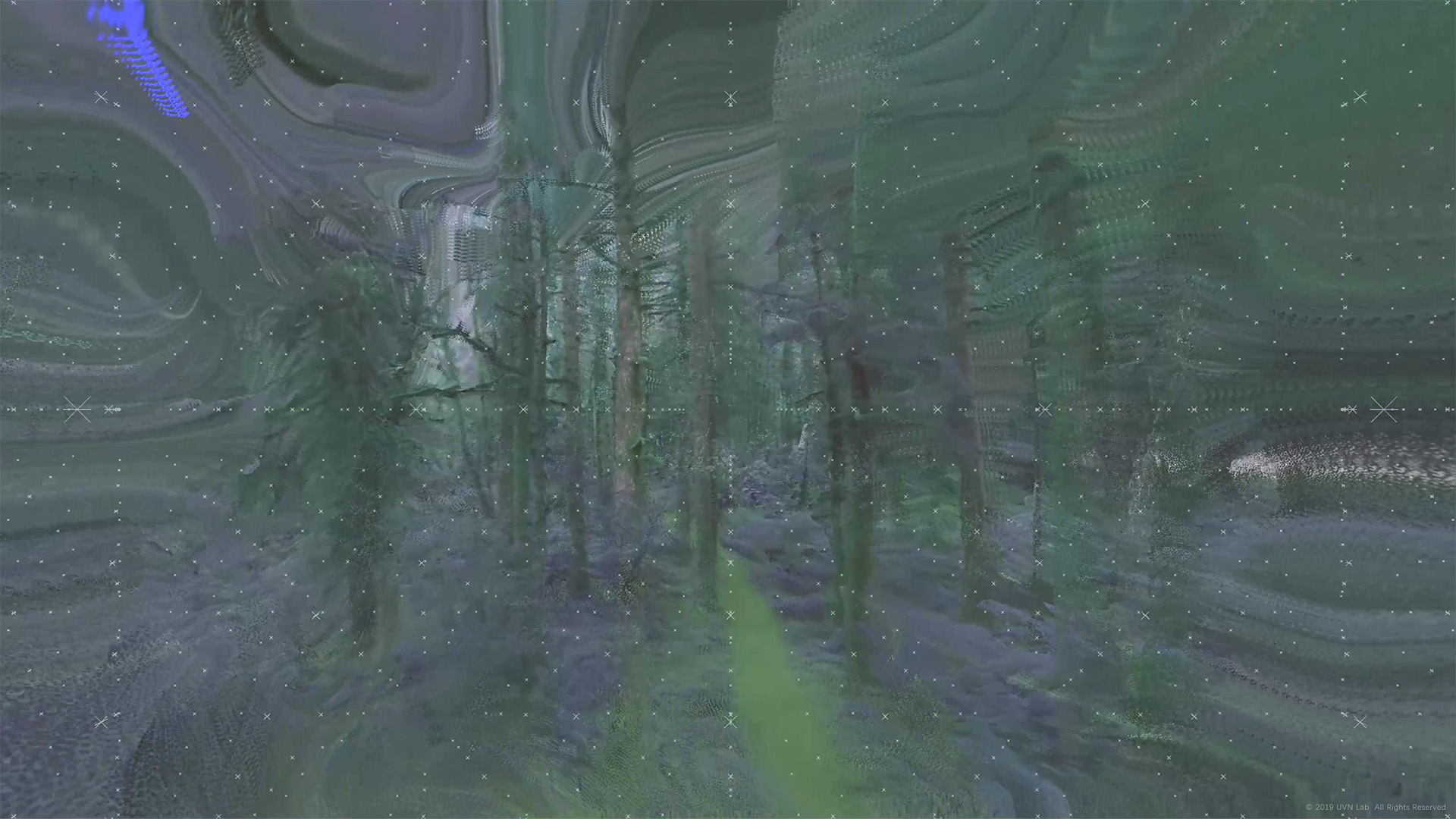

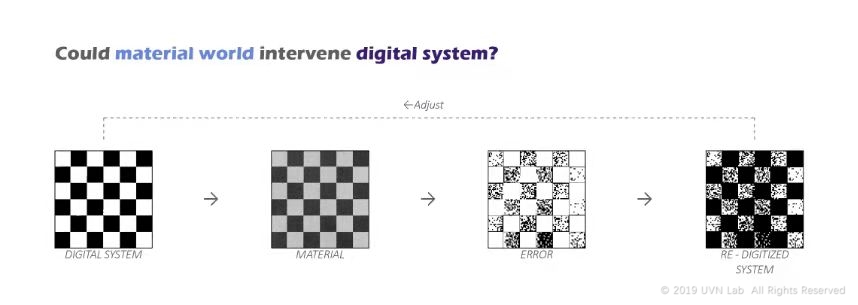

Soon it was the summer of 2020, and an accidental experiment reminded me of this question. I then tried to play the same game in a completely virtual way. When I projected the content captured by a virtual camera onto a background scene of uneven, non-flat geometry, the slight differences and deviations became much easier to control. As the perspective moves, the image from the last frame is not fully aligned with the current scene: pixels are shifted slightly and unevenly, forming a distorted image projected onto the scene. Finally, the projected scene is captured again and distorted further.

Not only does a slight difference in perspective cause huge distortions, but the forms that receive the projection also lead to completely different color flows. The changing background shape defines how the “fog” flows, much like a flow field from a classic optical flow algorithm. Like a ray of light tracing and bouncing across a surface, the smaller the angle between the projection and the surface, the faster the color flows, while the direction of the flow is guided by the orientation of the surface.
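One geometric reading of the angle effect, offered as a sketch and not as the method used in the project: assume parallel projection rays and a locally flat patch of surface. Two rays a small distance apart then land on the surface farther apart as the angle between the rays and the surface shrinks, by a factor of 1 / sin(angle). The function name and the sample angles are hypothetical.

```python
import math


def surface_shift(image_shift, grazing_angle_deg):
    """Distance along a flat surface covered by a small image-space shift.

    Assumes parallel projection rays and a locally flat surface.
    `grazing_angle_deg` is the angle between the rays and the surface:
    90 means head-on, values near 0 mean the rays skim the surface.
    """
    return image_shift / math.sin(math.radians(grazing_angle_deg))


# The same one-unit misalignment, projected at three assumed angles.
head_on = surface_shift(1.0, 90)   # no stretching
oblique = surface_shift(1.0, 30)   # stretched to twice the length
skimming = surface_shift(1.0, 5)   # stretched by more than ten times
```

Under these assumptions, the same per-frame misalignment moves color much farther across a surface seen at a shallow angle, which matches the faster flow described above.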

Over time, errors and differences accumulate and turn into drastic visual distortion. I named this project “Focal Lapse”, after Time Lapse. The name refers to the deviations that accumulate as the focal point changes over time, and to the exploration of such errors and deviations. Uneven spatial forms and changing perspectives are the key factors that drive this accumulation, and they also change our perception of the original space and objects.

This perspective-driven visual effect reminds me of another commonly used modelling technique, photogrammetry, which also constructs a scene by comparing deviations between neighboring frames. If earlier modelling technologies relied on manual abstraction and selection, technologies like photogrammetry are more automatic: by documenting massive amounts of information from a first-person perspective, they capture objects and spaces in a semi-real state. What will we see if we put these two together?