Acoustic Garden

As the first experiment of the Acoustic Garden Program, this project presents an “invisible” journey through nature, under the guidance of spatial audio in AR/VR. When the visual world is hidden, sound becomes the core of the narrative and guides us to rediscover our surroundings. As we listen and discover, our movement distorts the soundscape in turn, constructing an interactive musical narrative along our footsteps. Finally, as we find the sound, an artificial garden “blooms” for us.

Sounds guide movements, and movements reshape sounds, delivering a non-linear, ever-evolving and real-scale musical experience in AR/XR. Built on the core concepts of spatial music structure and DRAEM (Distance-Related Audio Effect Modulation), the project shows great potential for spatial music beyond traditional graphic synthesis and sonification.

The research focuses on decision-making support and accessibility through sensory augmentation in the physical world. In collaboration with an echolocation expert and a blind person, it reflects on challenges in audio-driven narrative and culture, hardware advancement, neuroscience processes, and social justice in technology.

2021

USC Grad Thesis

Built with Unreal Engine/MetaSound

Artist/Director – Yufan Xie

Instructor – Lisa Little

2021 Epic Megagrants Recipient

2021 A+D Design Awards, “In School” Category

2021 The Acoustical Society of America – Bradford Newman Student Medal – Merit in Architectural Acoustics Award

A human perspective upon urban space through sound

In the world of the garden, bees do not rely on vision to locate flowers but are guided by pheromones carried in the air. They glide through the garden, alighting gracefully on each blooming flower. This process symbolizes a deeper mode of perception that resonates with today’s augmented human perception, enabling alternative ways to sense and understand the world.

Spatial Music Innovation

In AR/XR design, spatial audio and audio-driven narratives are generally treated as secondary. Non-visual content production and research for visually impaired users are underrepresented. Similarly, in the field of music, visual interfaces dominate discussions on gesture-based sound synthesis, leaving intuitive, audience-driven experiences unexplored. Historically, studies in architecture and music have focused on spatial and musical sequences, but they have largely remained confined to representations in print media.

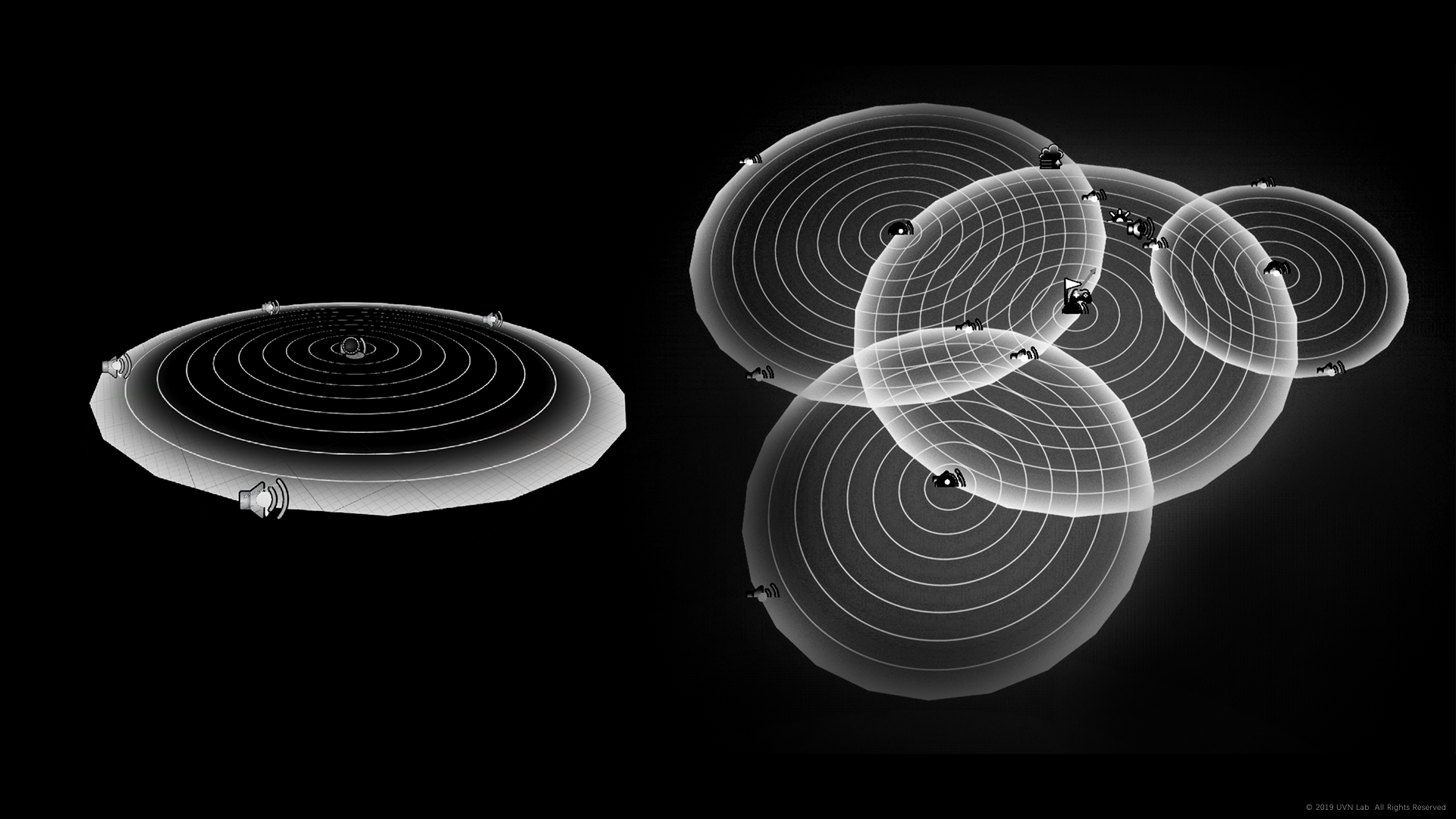

In this project, inspired by the progressive structure of electronic music, we introduce a spatialized sound synthesis method based on distance-related audio effect modulation combined with binaural spatialization. This approach is intended to guide users through space without depending on visual indicators, using auditory cues from a multitude of virtual audio objects instead. These objects respond to user movements, providing immersive musical experiences on a walking scale. Interaction with specific audio objects enables users to dictate different musical progressions, which follow a self-similar spatial structure.
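The idea of distance-related audio effect modulation can be sketched in a few lines. This is only an illustrative sketch, not the project's MetaSound implementation: the function names, the choice of a low-pass filter as the modulated effect, and every numeric range are assumptions made for the example. It shows one way a listener's distance to an audio object could drive an effect parameter while the volume is left untouched.

```python
import math

def distance(listener, source):
    """Euclidean distance between two (x, y, z) positions, in metres."""
    return math.dist(listener, source)

def draem_cutoff_hz(d, max_distance=20.0, near_hz=12000.0, far_hz=300.0):
    """Map a distance to a low-pass cutoff frequency (hypothetical effect choice).

    The cutoff is interpolated on a logarithmic frequency scale and clamped
    to [far_hz, near_hz]. Volume is deliberately not part of the mapping.
    All default values are illustrative assumptions.
    """
    t = min(max(d / max_distance, 0.0), 1.0)  # 0 at the object, 1 at or beyond max range
    return near_hz * (far_hz / near_hz) ** t

listener = (0.0, 0.0, 0.0)
audio_object = (3.0, 4.0, 0.0)       # hypothetical object position
d = distance(listener, audio_object)
cutoff = draem_cutoff_hz(d)          # brighter when close, duller when far
```

In this sketch, walking toward the object opens the filter continuously, so the listener hears a change with every step instead of a fade in loudness.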

Unlike traditional sonification methods that mainly replicate or translate data, our approach emphasizes the emotional impact of auditory messages. It unveils the potential for audience-involved, spatially-driven musical narratives. Testing across various platforms, we faced challenges in sound design, hardware limitations, and cognitive processing.

Awards

SIGGRAPH 2024

At the ACM SIGGRAPH 2024 Immersive Pavilion, the spatial music project Acoustic Garden was showcased to a large audience.

This is a remastered version of my previous USC Grad Thesis, instructed by Lisa Little. It explores how we seek visual stimulation under the guidance of sound in a hidden world, and how our movement produces interactive musical progression with DRAEM (Distance-Related Audio-Effect Modulation).

Production support: Wei Wu.

Publication: Acoustic Garden: Exploring Accessibility and Interactive Music with Distance-related Audio Effect Modulation in XR

RESEARCH PROGRESS

STAGE 1

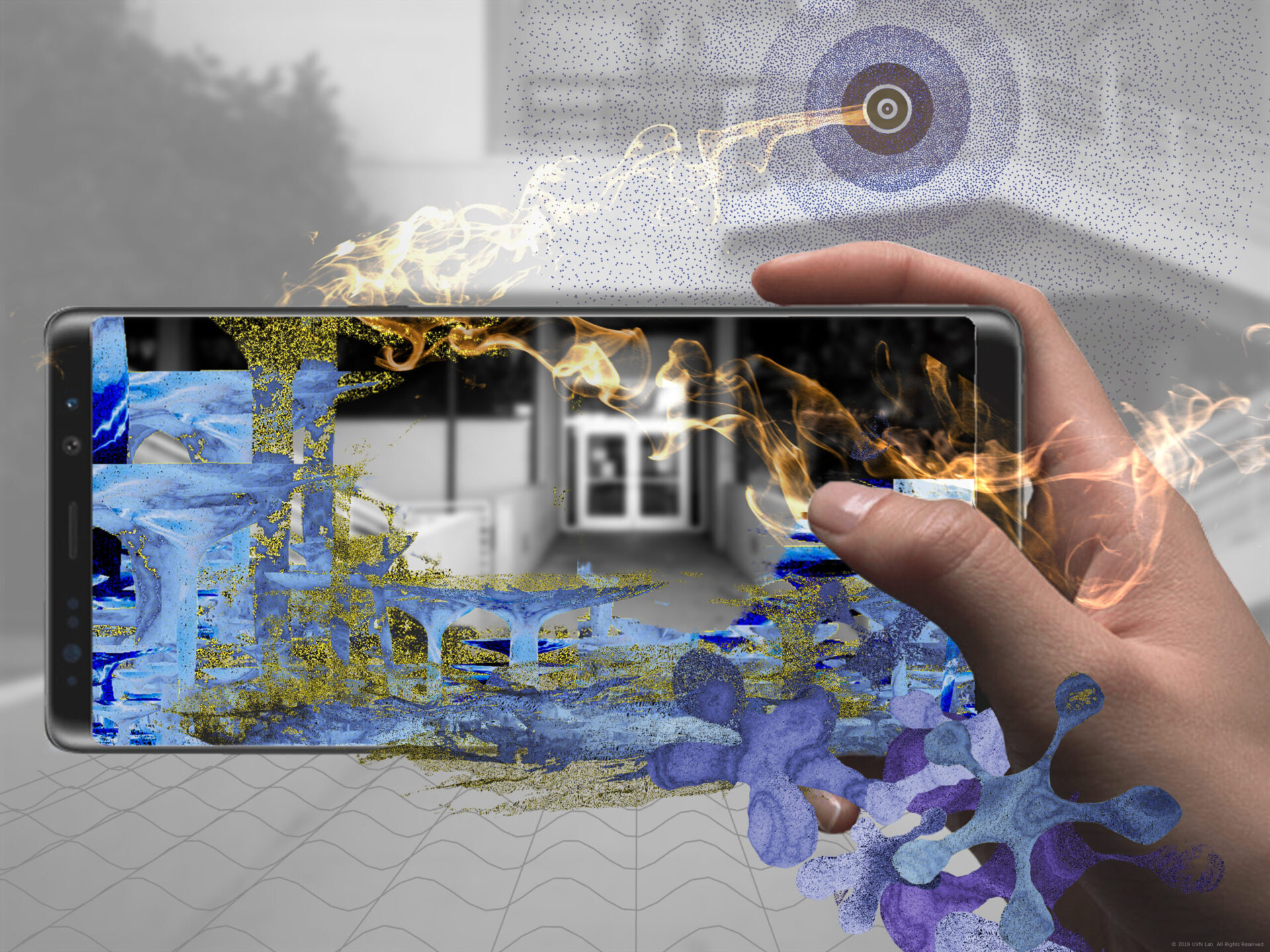

Prototype Test on Screen

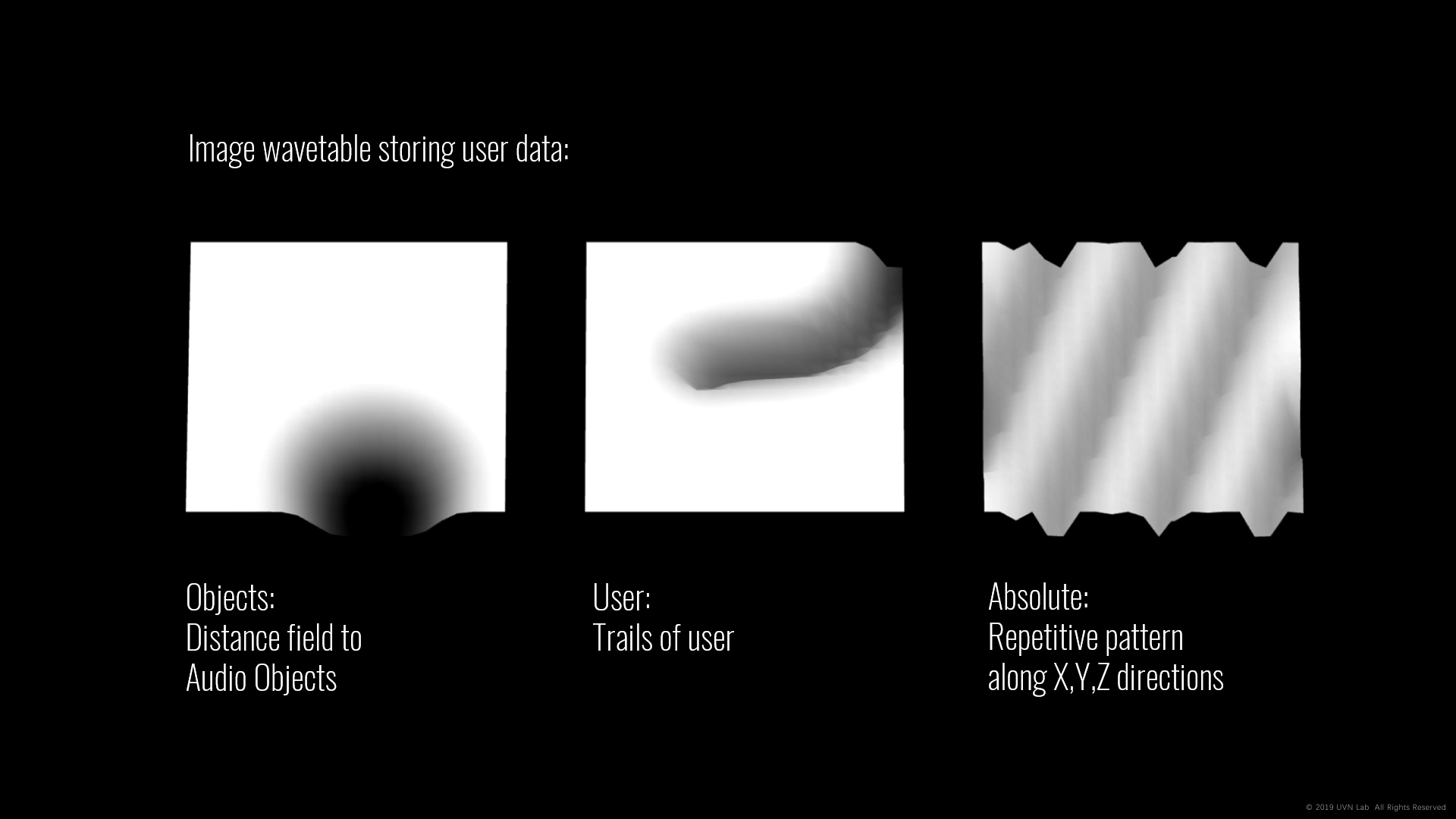

- Spatial data as a wavetable

- Disable volume attenuation; use distance-related modulation to convey the user's relationship to audio objects

Result:

- Users rely on the visual interface and visualized targets

- Amplitude attenuation of spatialized audio creates the expected silent areas, where users become disconnected

STAGE 2

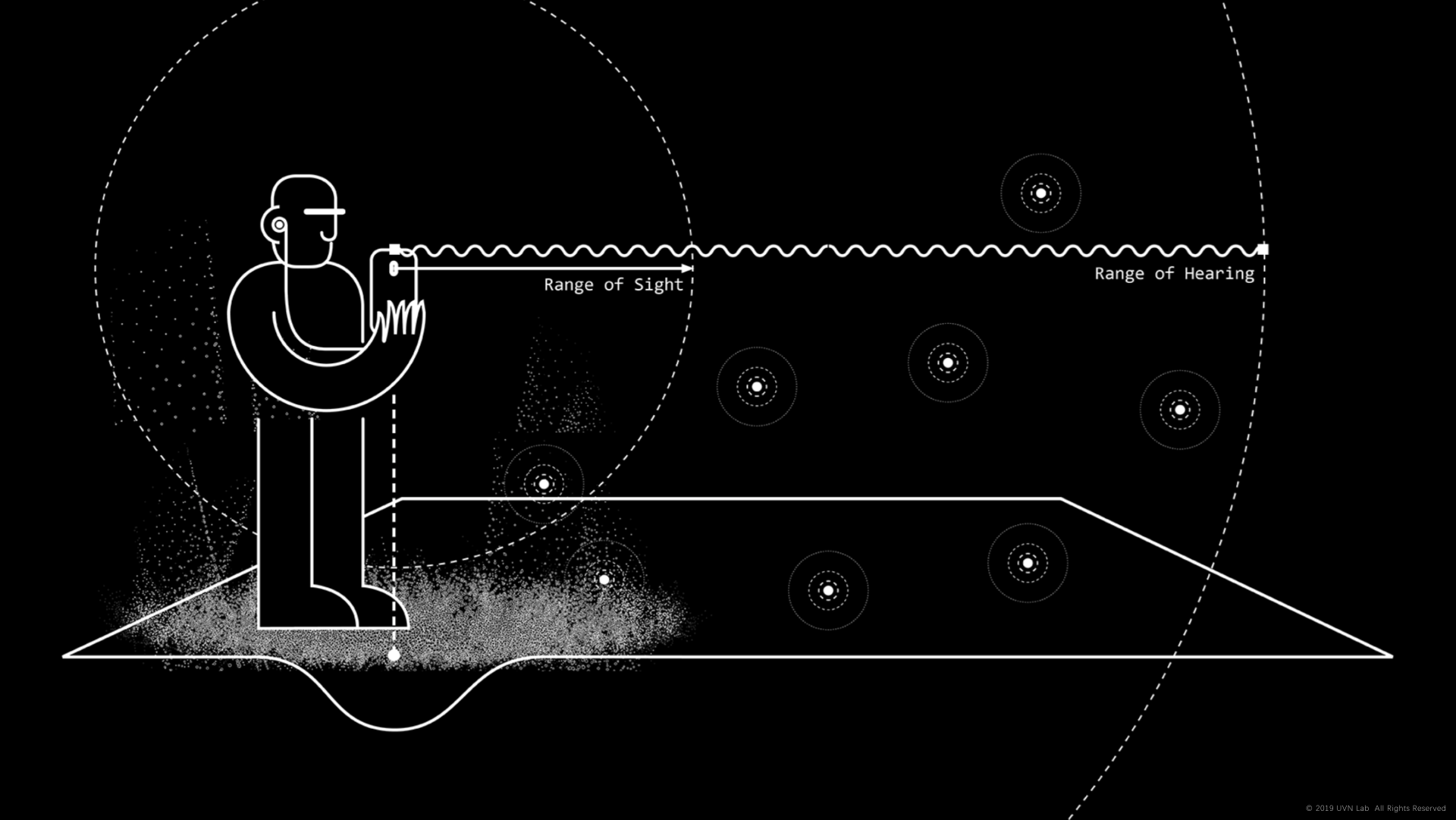

- Audible range beyond visible range

- Disable volume attenuation; use distance-related modulation to convey the user's relationship to audio objects

- Use bone-conduction/non-immersive headphones to avoid potential danger for blind users

Result:

- User-affected data duplicates the user's distance to the object and conflicts with the absolute data distribution

- Users sometimes lose track during the silent gaps between audio cues

- Repetitive patterns produce non-incremental variations, which confuse users and feel disconnected from their movements

STAGE 3

- Remove repetitive patterns along the X, Y, and Z directions

- Use the direct distance to the audio object for FX modulation

- Simple audio design for accessibility
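The change from Stage 2 to Stage 3 can be illustrated with a small comparison. This is a sketch under stated assumptions, not the project's actual mapping: `periodic_amount` is a hypothetical stand-in for a modulation pattern that repeats along each axis, `direct_amount` is a hypothetical mapping from straight-line distance, and the period and range values are invented for the example.

```python
import math

def periodic_amount(position, period=4.0):
    """Assumed Stage 2 style: modulation repeats every `period` metres on each axis."""
    return sum((c % period) / period for c in position) / 3.0

def direct_amount(position, source, max_distance=20.0):
    """Assumed Stage 3 style: modulation depends only on distance to the object."""
    return min(math.dist(position, source) / max_distance, 1.0)

source = (0.0, 0.0, 0.0)
# Walk in a straight line away from the object, one metre per step.
path = [(float(x), 0.0, 0.0) for x in range(0, 11)]

periodic = [periodic_amount(p) for p in path]
direct = [direct_amount(p, source) for p in path]

for p, a, b in zip(path, periodic, direct):
    print(f"x={p[0]:4.1f}  periodic={a:.3f}  direct={b:.3f}")
```

Along this path the periodic value wraps back to zero every four metres, while the direct value only grows as the listener moves away. That steady change is the kind of incremental feedback the Stage 3 notes aim for.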

Result:

- Audio spatialization is still delayed

- Message overload and readability

- Readability vs. experience; accessibility vs. affect

STAGE 4: Test on Headsets

To be continued……

PAST RECORDS:

Accessibility and spatial experience via interactive sounds

2021.04.26

Visual Test on Street

2021.04.10

Multi-Sequential & Multi-Chapter Sound Narrative Test

2021.04.01

2021.03.14

Multi-Sequential Test for Musical Narrative

2021.03.14

Multi-Sequential Test for Musical Narrative

2021.01.30

Spatial Data Sonification Test