Responsive Sonic Space in Architecture

This project builds on the author’s earlier exploration, GAP+ Architecture of Sound, and aims to develop a next-generation interface and system for responsive sound environments.

Brief

The software is the first multi-user platform that lets you design responsive 3D spaces using architectural definitions. The system specializes in data transmission and real-time content in architectural space. It reveals an augmented acoustic world for everyone.

For architects

Draw your own responsive environment with an architectural interface!

Sound navigation can greatly improve the experience of the blind community, and of everyone else.

For musicians

Through space definitions, parameters and signal circuits can be bound to user behavior inputs.

Now you can use your body like a theremin on a soundwalk! Music scenes and collaboration can be easily controlled in a shared space!

For everyone

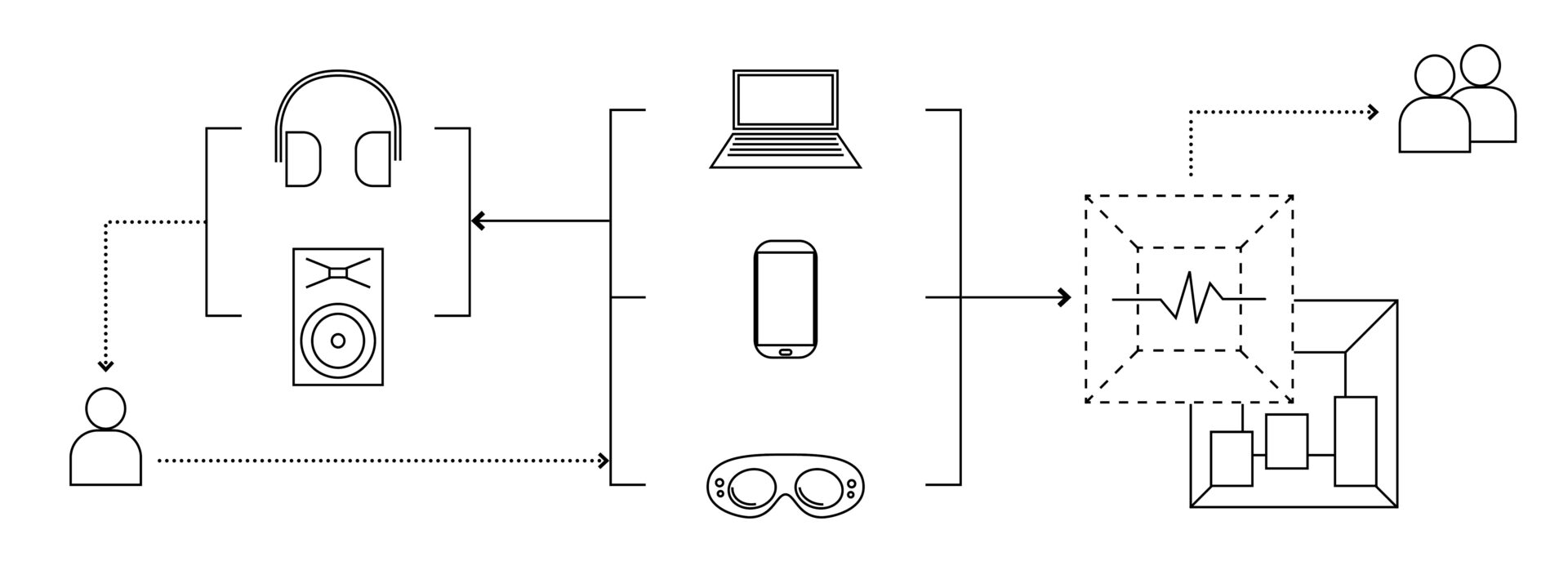

Sound can be output to devices such as earphones and experienced in real time.

Everyone is a player in your soundscape! Share and enjoy the sound toys you made with your friends now!

Core mechanism:

Modulations are sampled from the user’s location and behavior, then passed from space definitions (zone/field) to space elements (visual/sound), generating various scenes.
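The mechanism can be illustrated with a minimal sketch. It is not the project's actual code: the `Modulation` and `SoundElement` classes, the hand-height input, and the filter-cutoff target are all hypothetical names chosen for illustration. The sketch only shows the idea of sampling a user behavior and mapping it onto an element parameter.

```python
from dataclasses import dataclass


@dataclass
class Modulation:
    """Maps a raw input range onto a target parameter range (hypothetical)."""
    in_min: float
    in_max: float
    out_min: float
    out_max: float

    def apply(self, value: float) -> float:
        # Normalize the input, clamp it to [0, 1], then rescale to the output range.
        t = (value - self.in_min) / (self.in_max - self.in_min)
        t = max(0.0, min(1.0, t))
        return self.out_min + t * (self.out_max - self.out_min)


@dataclass
class SoundElement:
    """Stand-in for a sound element with one controllable parameter."""
    cutoff_hz: float = 200.0


def update(element: SoundElement, hand_height_m: float, mod: Modulation) -> None:
    # The sampled user behavior (hand height) is passed on to the element.
    element.cutoff_hz = mod.apply(hand_height_m)


# Assumed mapping: hand height 0.5 m to 2.0 m sweeps a cutoff from 200 Hz to 2000 Hz.
mod = Modulation(in_min=0.5, in_max=2.0, out_min=200.0, out_max=2000.0)
synth = SoundElement()
update(synth, hand_height_m=1.25, mod=mod)
print(synth.cutoff_hz)
```

Clamping keeps the parameter inside its target range when the user moves outside the expected input range, which is one plausible design choice among several.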

Target Platform:

PC/macOS, iOS (ARKit), Magic Leap

What is the future of interactive architecture? What is the future of music interfaces?

See the PDF below for the detailed proposal.

Framework

How do we design a responsive sound environment in an architectural way? How does a temporal medium like sound affect our perceptual world through spatialized interactivity?

How does architecture integrate concepts from other industries?

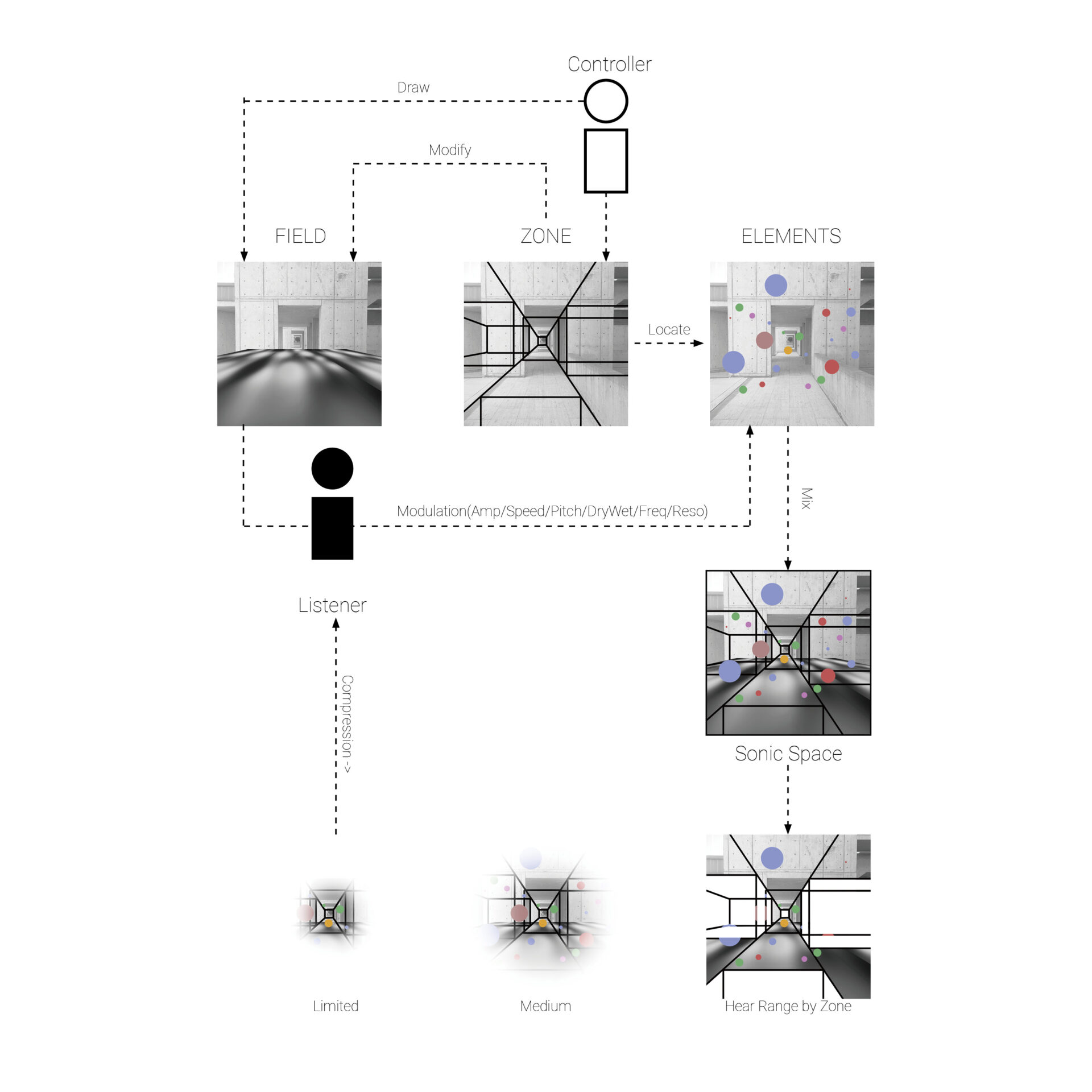

For architects, a “zone” defines boundaries and a “field” describes the distribution of parameters.

For musicians, a “scene” defines a combination of sounds and a “modulation” controls the parameters of a sound.

Combined, a “zone” defines the macro attributes of a specific 3D area, and a “field” defines how those attributes vary across locations.

As we move through a 3D space, we switch between “scenes”, which construct different events on our timeline.

Depending on where we or other objects are located, parameters are read from the “field,” modifying the messages we receive.

As users alter these spatial definitions, an interaction happens between user and space, as well as between user and user. This is exactly what architectural drawings like The Manhattan Transcripts try to argue.
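The relationship between zones, fields, and scenes described above can be sketched as follows. This is an illustration under assumptions, not the project's implementation: the box-shaped `Zone`, the linear `RadialField`, and the `active_scene` helper are hypothetical.

```python
from dataclasses import dataclass
import math


@dataclass
class Zone:
    """Axis-aligned box that names the scene active inside it (hypothetical)."""
    scene: str
    lo: tuple
    hi: tuple

    def contains(self, p) -> bool:
        # A point is inside when every coordinate lies within the box bounds.
        return all(a <= x <= b for a, x, b in zip(self.lo, p, self.hi))


@dataclass
class RadialField:
    """Parameter falling linearly from 1 at the center to 0 at the radius."""
    center: tuple
    radius: float

    def sample(self, p) -> float:
        d = math.dist(self.center, p)
        return max(0.0, 1.0 - d / self.radius)


def active_scene(zones, p, default="silence"):
    # The first zone containing the position decides the scene.
    for zone in zones:
        if zone.contains(p):
            return zone.scene
    return default


zones = [
    Zone("lobby", (0.0, 0.0, 0.0), (10.0, 10.0, 3.0)),
    Zone("gallery", (10.0, 0.0, 0.0), (20.0, 10.0, 3.0)),
]
reverb = RadialField(center=(15.0, 5.0, 1.5), radius=5.0)

# Walking along a straight line: the scene switches by zone,
# while the field value varies continuously with location.
for pos in [(2.0, 5.0, 1.5), (12.5, 5.0, 1.5), (30.0, 5.0, 1.5)]:
    print(pos, active_scene(zones, pos), reverb.sample(pos))
```

The zone gives a discrete answer (which scene is active) while the field gives a continuous one (how strong a parameter is here), which mirrors the macro/variation split in the text.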

The future of music interfaces

- Spatial interface and collaboration

- Complex user input

The future of Interactive Architecture

- Hyper dynamic media for instant experience

- Augmented reality

Why do we need it?

The acoustic world is the future of interactive architecture: sound scenes can be far more tangible than visual or material space.

How do we do it?

Unreal Engine already provides the support needed for sound processing and spatialization.

Who does it serve?

Visual and audio performers, the blind community, exhibition organizers, architectural designers, and anyone interested in sonic space.

Engineer it

or

PLAY IT.

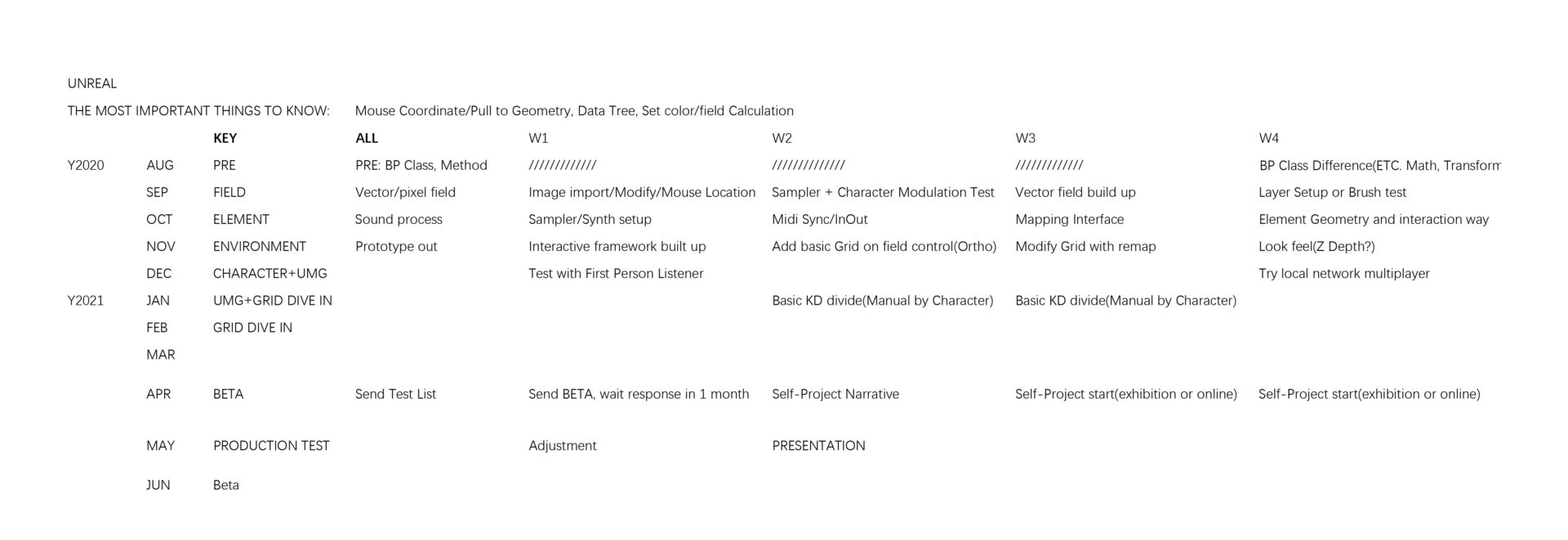

When will it be released?

The project is in the prototype stage; the field class is under test.

Before 2021: First usable build for basic object creation and mapping.

Spring 2021: Testing with musicians and sound designers.

Summer 2021: Open test release. Multiplayer goes into development.

See also:

Research Essay

Plan & Progress

This version is a cleaned-up one:

1. Zone and Field are merged into a single “space” class, which can hold a field layer and a zone layer.

2. Sound Element and Visual Element are merged into a single “Object” class, an open framework carrying an acoustic layer and a visual layer. An Object is itself both a trigger and a receiver, so messages from other objects can affect it. (There is now no difference between triggers and elements; it depends on which behaviors are added to the object.)
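A rough sketch of this merged structure, written in Python for brevity rather than in the engine's own language. All attribute names, the message format, and the `set_volume` behavior are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Space:
    """Merged zone + field: either layer may be attached (hypothetical layout)."""
    name: str
    zone_layer: Optional[dict] = None   # e.g. a boundary description
    field_layer: Optional[dict] = None  # e.g. a parameter distribution


@dataclass
class Object:
    """Merged sound/visual element that can both send and receive messages."""
    name: str
    acoustic_layer: dict = field(default_factory=dict)
    visual_layer: dict = field(default_factory=dict)
    behaviors: list = field(default_factory=list)

    def receive(self, message: str, value: float) -> None:
        # What an object does with a message depends only on its behaviors.
        for behavior in self.behaviors:
            behavior(self, message, value)

    def send(self, target: "Object", message: str, value: float) -> None:
        target.receive(message, value)


def set_volume(obj: Object, message: str, value: float) -> None:
    # Example behavior: react to "volume" messages on the acoustic layer.
    if message == "volume":
        obj.acoustic_layer["volume"] = value


room = Space("room", zone_layer={"shape": "box"}, field_layer={"falloff": "linear"})
door = Object("door")                              # no behaviors: acts as a pure trigger
speaker = Object("speaker", behaviors=[set_volume])
door.send(speaker, "volume", 0.8)
print(speaker.acoustic_layer)
```

Here `door` and `speaker` are instances of the same class; only the attached behaviors make one act as a trigger and the other as a sounding element.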

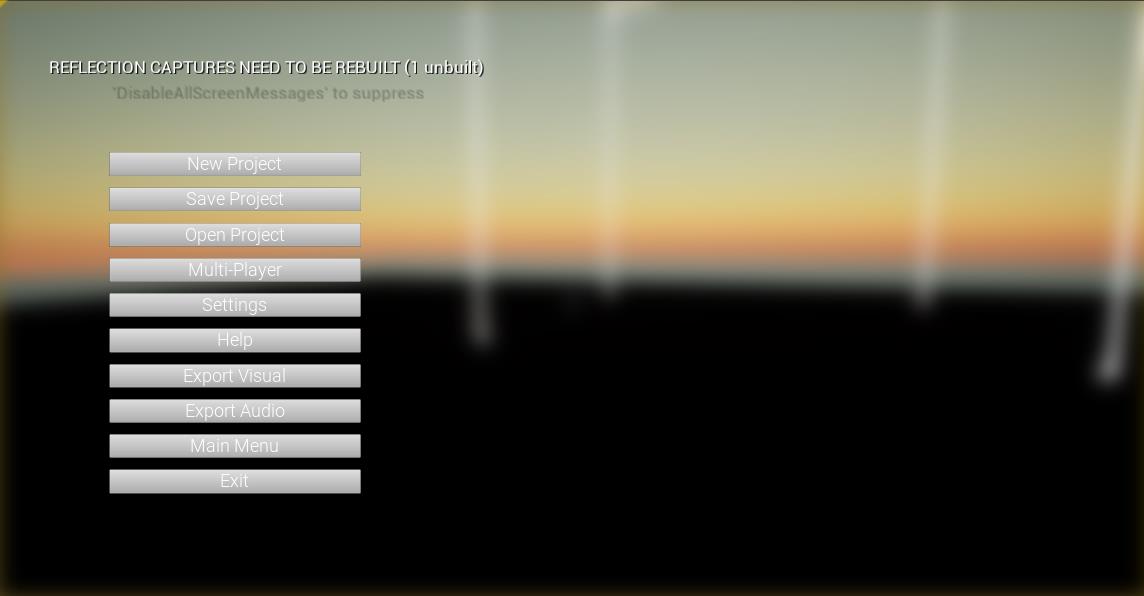

The Mouse Click Menu spawns near the player while editing zone and field channels, and near the target while editing visual/sound elements. It will be used to modify actors in the different edit modes. TAB is bound to switch the Edit Mode: 1. edit geometry (size, shape, rotation); 2. edit data (modulation mapping, mapping range, mapping target, etc.).
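The editing logic can be summarized in a small sketch. The names `EditMode`, `next_mode`, and `menu_anchor` are hypothetical and the engine's input handling is left out; only the two rules stated above are modeled.

```python
from enum import Enum


class EditMode(Enum):
    GEOMETRY = "geometry"  # size, shape, rotation
    DATA = "data"          # modulation mapping, mapping range, mapping target


def next_mode(mode: EditMode) -> EditMode:
    # TAB toggles between the two edit modes.
    return EditMode.DATA if mode is EditMode.GEOMETRY else EditMode.GEOMETRY


def menu_anchor(editing: str, player_pos, target_pos):
    # Zone and field channels open the menu near the player;
    # visual/sound elements open it near the target being edited.
    return player_pos if editing in ("zone", "field") else target_pos


mode = EditMode.GEOMETRY
mode = next_mode(mode)  # simulate one TAB press
print(mode, menu_anchor("sound", (0, 0, 0), (4, 2, 1)))
```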

Lag becomes obvious after running UE for a whole day; RAM is overloaded.